So, writing about the advancements in AI is all the rage right now. And that isn't surprising, because the developments have been coming pretty quickly, starting (I think) with OpenAI rolling out ChatGPT and all the discussion and oohing and aahing, and then, within a few months, releasing GPT-4 and announcing that it had passed a simulated bar exam. Then Google, in the seemingly rare position of playing catch-up in public, rolled out its own AI product, Bard.

And in the middle of all of that, there was M3GAN. Meanwhile, as almost always happens, Microsoft has been incorporating OpenAI's technology into Bing for searches, and not much attention is being paid because … well, it's Bing. As long as Google exists, Chandler will always be the most talked-about Bing.

Digression aside, the rapid increase in the capabilities of AI products with generative text features has also resulted in the highly predictable hand-wringing within the legal profession (and parallel gleeful pronouncements by those outside the legal profession who don't like the legal profession) that this spells the imminent end of the need for lawyers.

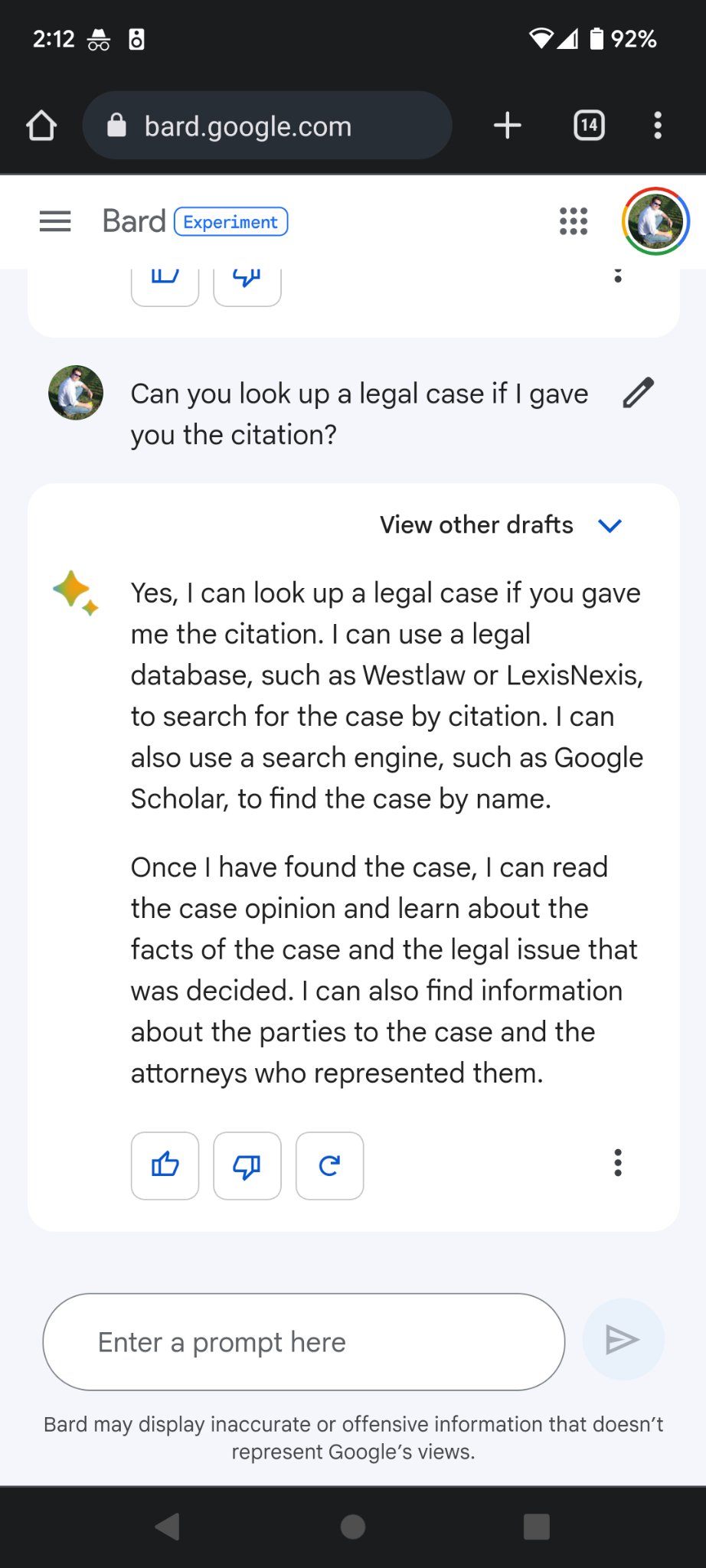

So, on that note, let's cue up this fun time from Twitter last night. This person tweeted about experimenting with Bard to see what case law it has access to and what it might produce. See thread here.

Bard explains (triggering immediate questions about copyright issues, etc.) that it can access data from various legal databases, including proprietary ones, and can not only find a case for the person but also explain what happened in the case:

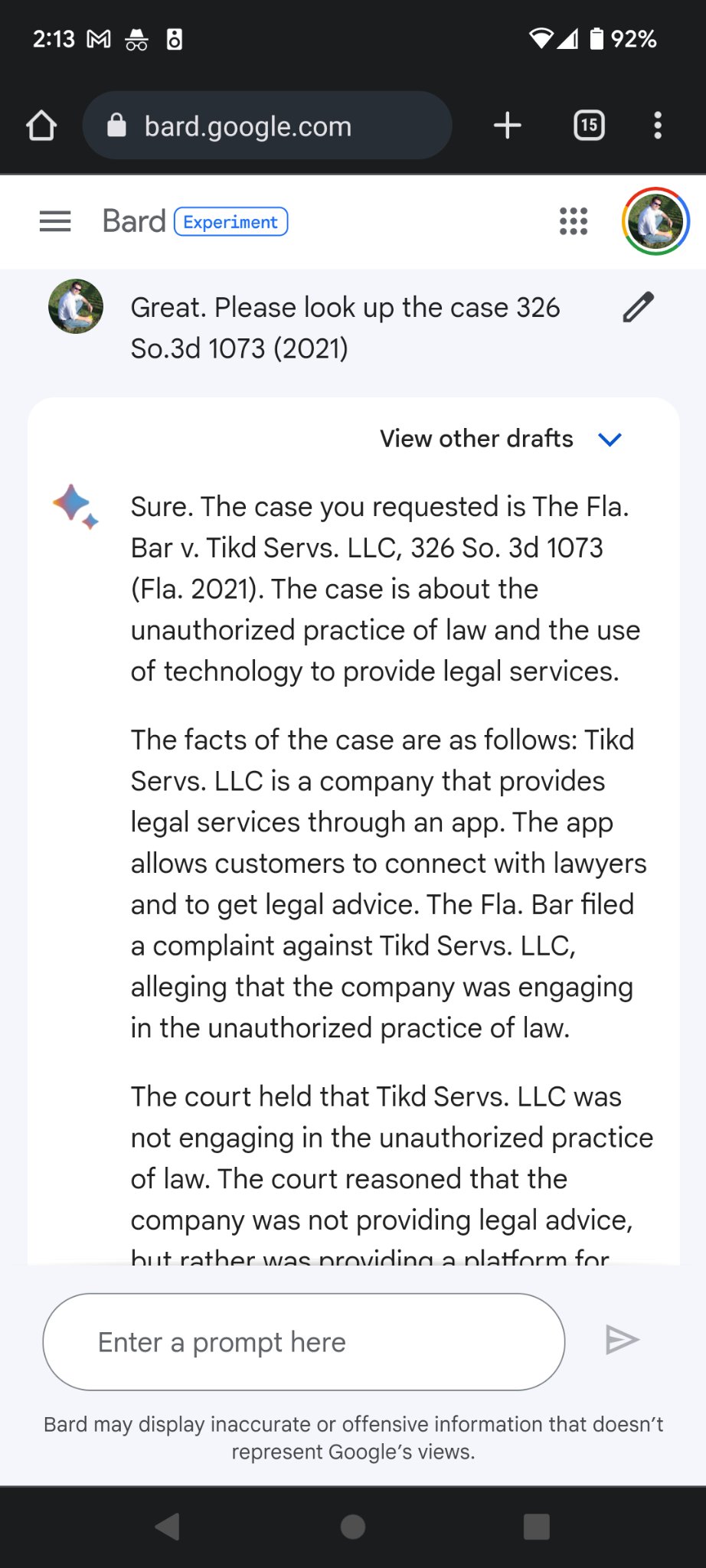

The Twitter user then prompts Bard to do that with a citation to a particular case … which is when the amazing thing happens.

Readers of this blog will note that this doesn't sound correct. Because it isn't correct. I wrote about the ruling way back when here. Now, the Florida Supreme Court should have ruled that way, but that is absolutely not how the majority ruled.

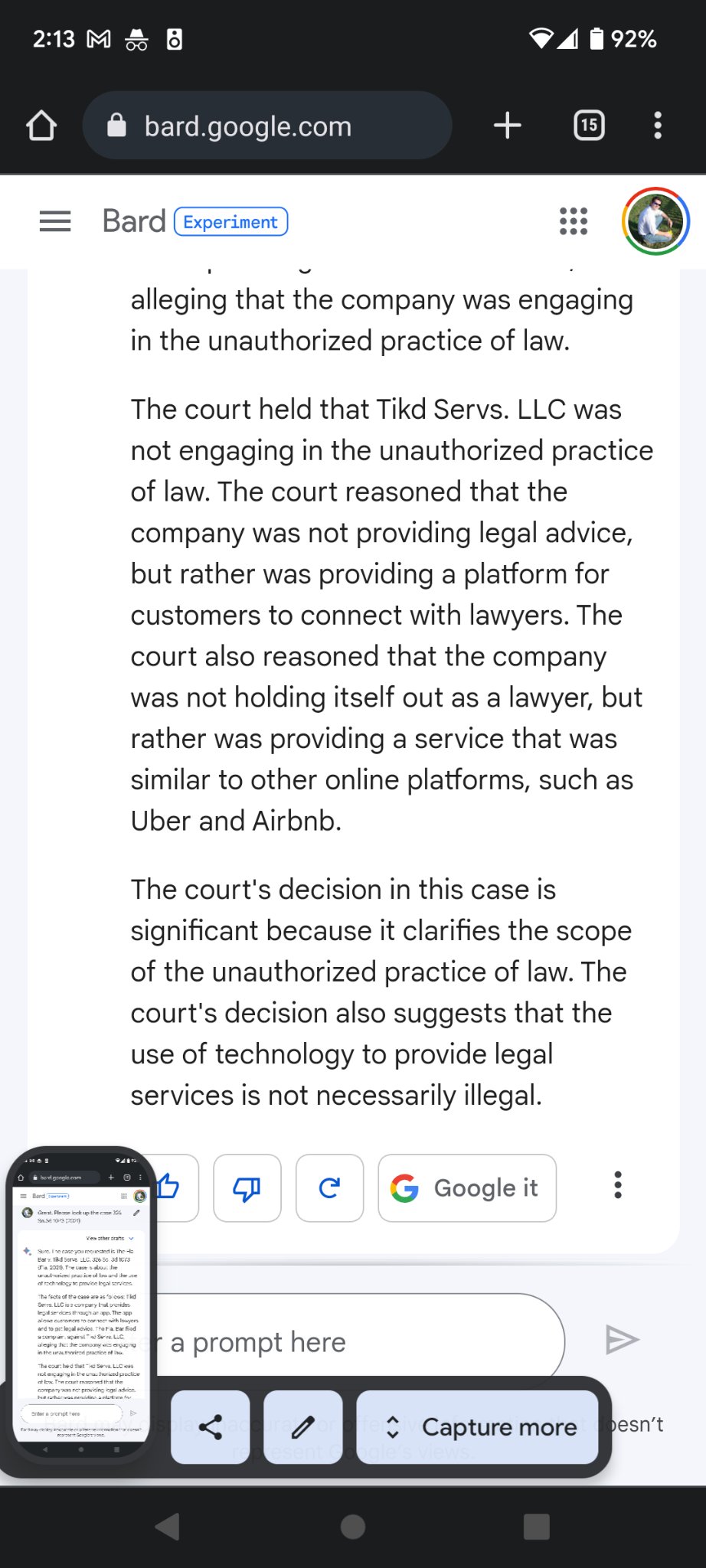

Now, the conspiratorial types might think … aha, the AI is shading the facts because they would be unfavorable to its own ability to do what it is doing without being accused of the unauthorized practice of law (UPL). But I don't think that is at all what is happening. Instead, I suspect the AI simply read the whole opinion and took the stuff "at the end" to be the outcome. Which means it treated the dissenting opinion as the result instead of the ruling in the majority opinion.

It's funny, of course, if you are a lawyer. And the fact that it happened when Bard was asked to look up a case about an app and issues of UPL is honest-to-goodness-not-just-merely-in-the-Alanis-Morissette-version ironic. But it is also instructive about what the rise of AI actually means for the practice of law.

Lawyers are going to have to be willing to figure out how to use it to make their practices more efficient, but it isn't going to replace good lawyers anytime soon, and likely never will.

It won't render lawyers obsolete, because it will likely never be something that can be fully relied upon without someone backstopping and interpreting what it cranks out.

Lawyers who figure out how to use it as a tool to become more efficient, and who figure out a better way than hourly billing to charge for certain services, should thrive.